Last week I had an interesting exchange with a colleague. We were discussing how some views and view models are going to interact in a WPF application we’re building, and I was proposing an approach which involves composition of models within parent models. Apparently my colleague is vehemently opposed to this idea, though I’m really not certain why.

It’s no secret that the majority of my experience is as a web developer, and in ASP.NET MVC I use composite models all the time. That is, I may have a view which is a host of several other views and I bind that view to a model which is itself a composition of several other models. It doesn’t necessarily need to be a 1:1 ratio between the views and the models, but in most clean designs that ends up happening if for no other reason than both the views and the models represent some atomic or otherwise discrete and whole business concept.

The tooling has no problem with this. You pass the composite model to the view, then in the view where you include your “partial” views (which, again, are normal views from their own perspective) you supply to that partial view the model property which corresponds to that partial view’s expected model type. This works quite well and I think distributes functionality into easily re-usable components within the application.

My colleague, however, asserted that this is “tight coupling.” Perhaps there’s some aspect of the MVVM pattern of which I’m unaware? Some fundamental truth not spoken in the pattern itself but known to those who often use it? If there is, I sure hope somebody enlightens me on the subject. Or perhaps it has less to do with the pattern and more to do with the tooling used in constructing a WPF application? Again, please enlighten me if this is the case.

I just don’t see the tight coupling. Essentially we have a handful of models, let’s call them Widget, Component, and Thing. And each of these has a corresponding view for the purpose of editing the model. Now let’s say our UI involves a single large “page” for editing each model. Think of it like stepping through a wizard. In my mind, this would call for a parent view acting as a host for the three editor views. That parent view would take care of the “wizard” bits of the UX, moving from one panel to another in which the editor views reside. Naturally, then, this parent view would be bound to a parent view model which itself would consist of some properties for the wizard flow as well as properties for each type being edited. A Widget, a Component, and a Thing.
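To make the shape of that design concrete, here is a minimal sketch of the parent view model described above. It is written in Python rather than C# purely to keep it short and self-contained, and the field names (`name`, `part_number`, `description`, `current_step`) are placeholders I've assumed for illustration, not anything from the real application.

```python
from dataclasses import dataclass, field


@dataclass
class WidgetViewModel:
    name: str = ""  # hypothetical field


@dataclass
class ComponentViewModel:
    part_number: str = ""  # hypothetical field


@dataclass
class ThingViewModel:
    description: str = ""  # hypothetical field


@dataclass
class WizardViewModel:
    """Parent view model: wizard state plus one child per editor panel."""

    widget: WidgetViewModel = field(default_factory=WidgetViewModel)
    component: ComponentViewModel = field(default_factory=ComponentViewModel)
    thing: ThingViewModel = field(default_factory=ThingViewModel)
    current_step: int = 0

    # Panel order for the wizard flow; each entry names a child property.
    STEPS = ("widget", "component", "thing")

    def current_child(self):
        """Return the child view model the active panel should bind to."""
        return getattr(self, self.STEPS[self.current_step])

    def next_step(self):
        """Advance to the next panel, stopping at the last one."""
        self.current_step = min(self.current_step + 1, len(self.STEPS) - 1)
```

The parent only holds its children and the wizard flow. Each editor panel would bind to whatever `current_child()` returns, and none of the child classes mention the wizard at all.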

What is being tightly coupled to what in this case?

Is the parent view model coupled to the child view models? I wouldn’t think so. Sure, it has properties of the type of those view models. In that sense I suppose you could say it has a dependency on them. But if we were to avoid such a dependency then we wouldn’t be able to build objects in an object-oriented system at all, save for ones which only recursively have properties of their own type. (Which would be of very limited use.) If a Wizard shouldn’t have a property of type Widget then why would it be acceptable for it to have a property of type string? Or int? Those are more primitive types, but types nonetheless. Would we be tightly coupling the model to the string type by including such a property?

Certainly not, primarily because the object isn’t terribly concerned with the value of that string. Granted, it may require specific string values in order to exhibit specific behaviors or perform specific actions. But I would contend that if the object is provided with a string value which doesn’t meet these criteria it should still be able to handle the situation in some meaningful, observable, and of course testable way. Throw an ArgumentException for incorrect values, silently be unusable for certain actions, anything of that nature as the logic of the system demands. You can provide mock strings for testing, just like you can provide mock Widgets for testing. (Though, of course, you probably wouldn’t need a mocking framework for a string value.)

Conversely, are the child view models tightly coupled to the parent view model? Again, certainly not. The child view models in this case have no knowledge whatsoever of the parent view model. Each can be used independently with its corresponding view regardless of some wizard-style host. It’s by coincidence alone that the only place they’re used in this particular application (or, rather, this particular user flow or user experience) is in this wizard flow. But the components themselves are discrete and separate and can be tested as such. Given the simpler example of an object with a string property, I think we can agree that the string type itself doesn’t become coupled to that object.
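Both directions of that argument can be checked with a few lines of test code. The following is only a sketch in Python, and the `is_valid()` and `can_finish()` methods, along with the `StubWidget` test double, are names I've made up to have something to test.

```python
class WidgetViewModel:
    """Child view model; it holds no reference to any parent."""

    def __init__(self, name=""):
        self.name = name

    def is_valid(self):
        return bool(self.name.strip())


class WizardViewModel:
    """Parent view model; it accepts any object exposing is_valid()."""

    def __init__(self, widget):
        self.widget = widget

    def can_finish(self):
        return self.widget.is_valid()


class StubWidget:
    """Test double standing in for a real widget view model."""

    def __init__(self, valid):
        self._valid = valid

    def is_valid(self):
        return self._valid


# The child is testable on its own, with no wizard in sight.
assert WidgetViewModel("Sprocket").is_valid()
assert not WidgetViewModel("   ").is_valid()

# The parent is testable with a stub, much like supplying a "mock string".
assert WizardViewModel(StubWidget(valid=True)).can_finish()
assert not WizardViewModel(StubWidget(valid=False)).can_finish()
```

If the parent and child were tightly coupled, neither pair of assertions could be written without constructing the other class.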

So… Am I missing something? I very much contend that composition is not coupling. Indeed, composition is a fundamental aspect of object-oriented design in general. We wouldn’t be able to build rich object systems without it.

Monday, April 28, 2014

Monday, April 21, 2014

Say Fewer, Better Things

Last week, while beginning a new project with a new client, an interesting observation was made of me by the client. As is usual with a new project, the week was filled with meetings and discussions. And more than once the project sponsor explicitly said to me, "Feel free to jump in here as well." Not in a snarky way mind you, he just wanted to make sure I'm not waiting to speak and that my insights are brought to the group. At one point he said, "I take it you're the strong silent type, eh?"

Well, I like to think so.

In general it got me thinking, though. It's no secret that I'm very much an introvert, and that's okay. So for the most part I have a natural tendency to prefer not speaking over speaking. But the more I think about it, the more I realize that there's more to it than that.

As it turns out, in a social gathering I'm surprisingly, well, social. I'm happy to crack a joke or tell a story, as long as I don't become too much a center of attention. If I notice that happening, I start to lose my train of thought. In small groups though it's not a problem. In a work setting, however, I tend not to jump in so much. It's not that I'm waiting for my turn to speak, it's that I'm waiting for my turn to add something of value.

This is intentional. And I think it's a skill worth developing.

I've had my fair share of meetings with participants who just like to be the center of the meeting. For lack of a better description, they like to hear themselves talk. The presence of this phenomenon varies wildly depending on the client/project. (Luckily my current project is staffed entirely by professionals who are sharp and to the point, for which I humbly thank the powers that be.) But I explicitly make it a point to try not to be this person.

Understand that this isn't because I don't want to speak. This is because I do want to listen. I don't need (or even really want) to be the center of attention. I don't need to "take over" the meeting. My goal is to simply contribute value. And I find that I can more meaningfully contribute value through listening than through speaking.

I'll talk at some point. Oh, I will definitely talk. And believe me, I'm full of opinions. But in the scope of a productive group discussion are all of those opinions relevant? Not really. So I can "take that offline" in most cases. A lot of that, while potentially insightful and valuable, doesn't necessarily add value to the discussion at hand. So rather than take the value I already know and try to adjust the meeting/discussion/etc. to fit my value, I'd rather absorb the meeting/discussion/etc. and create new value which I don't already know which targets the topic at hand.

That is, rather than steer the meeting toward myself, I'd rather steer myself toward the meeting. And doing so involves more listening than talking. Sometimes a lot more.

In doing so, I avoid saying too much. Other people in the meeting can point out the obvious things, or can brainstorm and openly steer their trains of thought. What I'll do is follow along and observe, and when I have a point to make I'll make it. I find this maximizes the insightfulness and value of my points, even if they're few and far between. And that's a good thing. I'd rather be the guy who made one point which nobody else had thought of than the guy who made a lot of points which everybody else already knew. The latter may have been more the center of attention, but the former added more value.

Listen. Observe. Meticulously construct a mental model of what's being discussed. Examine that model. And when the room is stuck on a discussion, pull from that model a resolution to that discussion. After all, concluding a discussion with a meaningful resolution is a lot more valuable than having participated in that discussion with everybody else.

Thursday, April 10, 2014

Agile and Branching

I've recently interacted with an architect who made a rather puzzling claim in defense of his curious and extraordinarily inefficient source control branching strategy. He said:

"One of the core principles of agile is to have as many branches as possible."

I didn't have a reply to this statement right away. It took a while for the absurdity of it to really sink in. He may as well have claimed that a core principle of agile is that the oceans are made of chocolate. My lack of response would have been similar, and for the same reason.

First of all, before we discuss branching in general, let's dispense with the provable falsehoods of his statement. The "core principles" of agile are, after all, highly visible for all to see:

We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value:

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

That is, while there is value in the items on the right, we value the items on the left more.

Pretty succinct. So let's look at them one-by-one in this case:

Individuals and interactions over processes and tools

The intent here is pretty clear. This "core principle" is to favor the team members and how they interact, not to favor some particular tool or process. If a process which works well with one team doesn't work well with another team, that other team shouldn't adopt or adhere to that process. Put the team first, not the process or the tool.

Source control is a tool. Branching is a process. To favor such things despite a clear detriment to the team and to the business value is to explicitly work against the very first principle of agile. Indeed, not only does agile as a software development philosophy make no claim about tools and processes, it explicitly says not to do so.

Working software over comprehensive documentation

In this same environment I've often heard people ask that these processes and strategies at least be documented so that others can understand them. While that may put some balm on the wound in this company, it's not really a solution. If you document a fundamentally broken process, you haven't fixed anything.

The "core principle" in this case is to focus on delivering a working product. Part of doing that is to eliminate any barriers to that goal. If the developers can't make sense of the source control, that's a barrier.

Customer collaboration over contract negotiation

In this case the "customer" is the rest of the business. The "contract" is the requirements given to the development team for software that the business needs. This "negotiation" takes the form of any and all meetings in which the development team and the business team plan out the release strategy so that it fits all of the branching and merging that's going to take place.

That negotiation is a lie, told by the development team, and believed by the rest of the business. There is no need for all of this branching and merging other than to simply follow somebody's process or technical plan. It provides no value to the business.

Responding to change over following a plan

And those plans, so carefully negotiated above, become set in stone. Deviating from them causes significant effort to be put forth so that the tools and processes (source control and branching) can accommodate the changes to the plan.

So that's all well and good for the "core principles" of agile, but what about source control branching? Why is it such a bad thing?

The problem with branching isn't the branching per se, it's the merging. What happens when you have to merge?

- New bugs appear for no reason

- Code from the same files changed by multiple people has conflicts to be manually resolved

- You often need to re-write something you already wrote and was already working

- If the branch was separated for a long time, you and team members need to re-address code that was written a long time ago, duplicating effort that was already done

- The list of problems goes on and on...

Merging is painful. But, you might say, if the developers are careful then it's a lot less painful. Well, sure. That may be coincidentally true. But how much can we rely on that? Taken to an extreme to demonstrate the folly of it, if the developers were "careful" then the software would never have bugs or faults in the first place, right?

Being careful isn't a solution. Being collaborative is a solution. Branching means working in isolated silos, not interacting with each other. If code is off in a branch for months at a time, it will then need to be re-integrated with other code. It already works, but now it needs to be made to work again. If we simply practice continuous integration, we can make it work once.

This is getting a bit too philosophical, so I'll step back for a moment. The point, after all, isn't any kind of debate over what have become industry buzz-words ("agile", "continuous integration", etc.) but rather the actual delivery of value to the business. That's why we're here in the first place. That's what we're doing. We don't necessarily write software for a living. We deliver business value for a living. Software is simply a tool we use to accomplish that.

So let's ask a fundamental question...

What business value is delivered by merging branched code?

The answer is simple. None. No business value at all. Unless the actual business model of the company is to take two pieces of code, merge them, and make money off of that then there is no value in the act of merging branches. You can have those meetings and make those lies all you like, but all you're doing is trying to justify a failing in your own design. (By the way, those meetings further detract from business value.)

Value comes in the form of features added to the system. Or bugs fixed in the system. Or performance improved in the system. And time spent merging code is time not spent delivering this value. It's overhead. Cruft. And the "core principles" of agile demand that cruft be eliminated.

The business as a whole isn't thinking about how to best write software, or how to follow any given software process. The business as a whole is thinking about how to improve their products and services and maximize profit. Tools and processes related to software development are entirely unimportant to the business. And those things should never be valued above the delivery of value to the business.

Thursday, April 3, 2014

RoboCop-Driven Development

How many cooks are in your kitchen? Or, to use a less politically-correct analogy, what's the chief-to-indian ratio in your village? If you're trying to count them, there are too many.

There are as many ways to address this problem as there are stars in the sky. (Well, I live in a populated area, so we don't see a whole lot of stars. But there are some, I'm sure of it.) There can be a product owner at whom the buck stops for requirements, there can be quick feedback cycles to identify and correct problems as early as possible, there can be drawn-out analysis and charting of requirements to validate them, prototyping to validate them, etc.

But somehow, we often find ourselves back in the position that there are too many cooks in the kitchen. Requirements are coming from too many people (or, more appropriately, too many roles). This doesn't just end up killing the Single Responsibility Principle, it dances on the poor guy's grave.

Let me tell you a story. Several years ago I was a developer primarily working on a business-to-business web application for a private company. The company, for all its failings, was a stellar financial success. Imagine a dot-com-era start-up where the money never ran dry. I think it was this "can't lose" mentality which led to the corporate culture by which pretty much anybody could issue a requirement to the software at any time for any reason.

One day a requirement made its way to my inbox. On a specific page in the application there was a table of records, and records which meet a specific condition need to be highlighted to draw the attention of the user. The requirement was to make the text of those table rows red. A simple change to say the least. And very much a non-breaking change. So there wasn't really a need to involve the already over-burdened QA process. The change was made, developer-tested, and checked in. Another developer could even have code-reviewed it, had that process been considered necessary.

Around the same time, a requirement from somebody else in the business made its way to another developer's inbox. On a specific page in the application there was a table of records, and records which meet a specific condition need to be highlighted to draw the attention of the user. The requirement was to make the background of those table rows red. A simple change to say the least. And very much a non-breaking change. So there wasn't really a need to involve the already over-burdened QA process. The change was made, developer-tested, and checked in. Another developer could even have code-reviewed it, had that process been considered necessary.

Both requirements were successfully met. And, yes, the changes were successfully promoted to production. (Notice that I'm using a more business-friendly definition of the word "successfully" here.) The passive-aggressive side of me insists that there's no problem here. Edicts were issued and were completed as defined. The professional side of me desperately wants to fix this problem every time I see it.
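The two changes can be sketched in a few lines. This is a toy model in Python, not the original application's code: the style dictionary, the black-on-white default row, and the `is_readable` check are all assumptions made for illustration.

```python
def apply_text_requirement(style):
    """Requirement A: flagged rows get red text."""
    return {**style, "color": "red"}


def apply_background_requirement(style):
    """Requirement B: flagged rows get a red background."""
    return {**style, "background": "red"}


def is_readable(style):
    """Crude legibility check: text must differ from its background."""
    return style["color"] != style["background"]


# Assumed default styling for a table row.
default_row = {"color": "black", "background": "white"}

# Each change passes its own developer test in isolation.
only_a = apply_text_requirement(default_row)
only_b = apply_background_requirement(default_row)
assert only_a["color"] == "red" and is_readable(only_a)
assert only_b["background"] == "red" and is_readable(only_b)

# Deployed together, both requirements are still "met" but the row is unreadable.
both = apply_background_requirement(apply_text_requirement(default_row))
assert both == {"color": "red", "background": "red"}
assert not is_readable(both)
```

Neither developer's test could have caught the problem, because neither requirement said anything about the other.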

Sometimes requirements, though individually well-written and well-intentioned, don't make sense when you put them together. Sometimes they can even be mutually exclusive. The psychology of this can be difficult to address. We're talking about two intelligent and reasonable experts in their field. Each individually put some measure of thought and care into his or her own requirement. That requirement makes sense, and it's necessary for the growth of the system to meet business needs. Imagine trying to explain to this person that their requirement doesn't make sense. Imagine trying to say, "You are right. And you over there are also right. But together you are wrong."

To a developer, that conversation always sounds like this:

Someone wanted the lines to be red. Somebody else wanted them to be drawn with specific inks. Somebody else wanted there to be seven of them. Somebody else wanted them to be perpendicular to each other. And so on and so forth. In the video all of those requirements came filtered through what appeared to be a product owner, but the concept is the same. And to a developer there isn't always a meaningful difference. "You" made this requirement can be the singular "you" or the plural "you", it's just semantics.

Remember that "business-friendly" definition of "successful"? That is, we can all work diligently to achieve every goal we set forth as a business, and still not get anywhere. The goals were "successfully" met, but did the product successfully improve? We did what we set out to do, and we pat ourselves on the back for it. All the while, nothing got done. Each individual goal was reached, but if you stand back and look at the bigger picture you clearly see that it was all a great big bloody waste of time.

(If your hands-on developers can see the big picture and your executives can't, something's wrong.)

Creators of fiction have presented us with this group-think phenomenon many times. My personal favorite, for reasons unknown to me, is RoboCop 2. If you'll recall, RoboCop had a small set of prime directives. These were his standing orders which he was physically incapable of violating. They were hard-coded into his system, and all subsequent orders must at least maintain these:

- Serve the public trust

- Protect the innocent

- Uphold the law

- (There was a fourth, "classified" directive placed there by the suits who funded his construction, preventing him from ever acting against them personally. That was later removed.)

There's lots of precedent for "directives" such as these. Much of the inspiration clearly comes from Isaac Asimov's three laws of robotics. Hell, even in real life we have similar examples, such as the general orders presented to soldiers in the US Army during basic training. It's an exercise in simplicity. And, of course, life is never that simple. Asimov's stories demonstrated various ways in which the Three Laws didn't work as planned (or worked completely differently than planned, such as when a robot invented faster-than-light travel). And I'm sure any soldier will tell you some stories of real life after training.

But then fast-forward to RoboCop 2, when the special interest groups got involved. It became political. And his 3 directives quickly ballooned into over 300 directives. Each of which, I'm sure, was well-intentioned. But when you put all of them together, he became entirely unable to function. He had too many conflicting requirements. Too many individual "rights" added up to one great big "wrong."

(Parents of the world would be perplexed. Two wrongs don't make a right, but two rights can very easily make a wrong.)

Is your business environment a dizzying maelstrom of special interest groups? Do they each have an opportunity to individually contribute to the product? Is this helping the product? Which is more important to the business... Appeasing every group individually or building a compelling product?

Tuesday, April 1, 2014

Scientists Have Been Doing TDD for Centuries

I've recently been watching Cosmos: A Spacetime Odyssey (and I don't have the words to describe how excellent it is), and the subject came up with my children about what science really is. Fundamentally, aside from all the various beliefs and opinions that people have, how can one truly know what is "science" and what is not?

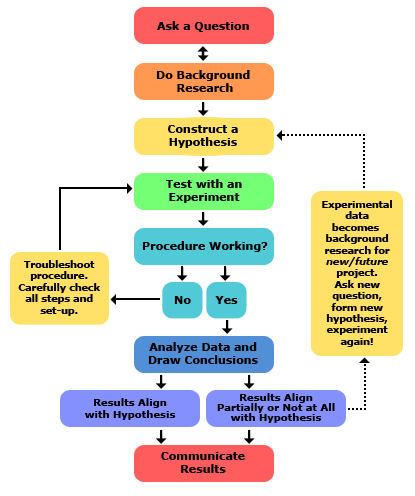

A Google search on "the scientific method" (which I think we can agree is the core foundation of all scientific study) lands me on a site called Science Buddies, which presents this handy graphic to visualize the process:

The answer turns out to be very simple. The science is in the test. Ideas, opinions, even reason and logic themselves are all ancillary concepts to the test. If it isn't tested (or for whatever reason can't be tested, such as supernatural beliefs), then it isn't science. That isn't to say that it's wrong or bad, simply that it isn't science.

Science is the act of validating something with a test.You can believe whatever you like. You can even apply vast amounts of reason and logic and irrefutably derive a very sensible conclusion about something. But if you don't validate it with a test, it's not science. It's conjecture, speculation. And as I like to say at work, "Speculation is free, and worth every penny."

A Google search on "the scientific method" (which I think we can agree is the core foundation of all scientific study) lands me on a site called Science Buddies, which presents this handy graphic to visualize the process:

This seems similar enough to what I remember from grammar school. Notice a few key points:

- Attention is drawn to the bright green box. (Green means good, right?) That box is the center of the whole process. That box is the test being conducted.

- The very first step is "Ask a Question." Not define what you think the answer is, not speculate on possible outcomes, but simply ask the question.

- There's a cycle to the process, using the tests to validate each iteration of the cycle. The results of the tests are fed back into the process to make further progress.

This reminds me of another cycle of testing:

Even the coloring is the same. The first step, in red, is to ask the question. The central step, in green, is to run a test against the implementation to produce results, validating both the test and the implementation. The loop back step, in blue, is to adjust the system based on the results of the test and return to the start of the cycle, using the test and its results to further refine and grow the next test(s).
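One pass around that loop might look something like the following sketch, using Python's built-in `unittest` module. The `slugify` function is a made-up example, chosen only because it is small enough to fit in one iteration.

```python
import unittest


# Red: ask the question first, as a test, before any implementation exists.
class SlugTest(unittest.TestCase):
    def test_spaces_become_hyphens(self):
        self.assertEqual(slugify("Agile and Branching"), "agile-and-branching")


# Green: the simplest implementation that makes the test pass.
def slugify(title):
    return "-".join(title.lower().split())


# Blue: with the test in place, refactor or pose the next question and repeat.
def run_suite():
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Running `run_suite()` before `slugify` exists would fail, which is the point of the red step. The result it returns is what gets fed back into the next iteration.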

TDD is the Scientific Method applied to software development.

The two processes share core fundamental values. Values such as:

- Begin by asking a question. The purpose of the process is not to answer the question, but to arrive at a means of validating an answer. Answers can change over time, the key value is in being able to validate those answers.

- Tests must be repeatable. Other team members, other teams, automated systems, etc. must be able to set up and execute the test and observe the results. If the results are inconsistent then the tests themselves are not valid and must be refined, removing variables and dependencies to focus entirely on the subject being tested.

- The process is iterative and cyclic. Value is attained not immediately, but increasingly over time. Validating something once is only the first step in the process. If the same thing can't be validated repeatedly then it wasn't valid in the first place, and the initial result was a false positive. Only through repetition and analysis can one truly be confident in the result.
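The repeatability point is the easiest of these to show in code. Here is a minimal sketch, assuming a hypothetical `greeting` function whose output depends on the time of day. Passing the time in as an argument, rather than reading the system clock inside the function, removes the one variable that would make the test give different results on different runs.

```python
from datetime import datetime


def greeting(now):
    """Pick a greeting from the supplied time rather than the system clock."""
    return "Good morning" if now.hour < 12 else "Good afternoon"


# The uncontrolled variable (the wall clock) is replaced by a fixed input,
# so every run, by anyone, observes the same result.
assert greeting(datetime(2014, 4, 1, 9, 0)) == "Good morning"
assert greeting(datetime(2014, 4, 1, 15, 0)) == "Good afternoon"
```

Had `greeting` called `datetime.now()` itself, the same test would pass in the morning and fail after lunch, and a result like that validates nothing.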

We've all heard the analogy that TDD is similar to double-entry bookkeeping. And that analogy still holds true. This one is just resonating with me a lot more at the moment. It's not just the notion that the code and the tests mutually confirm each other, but that the fundamental notion of being able to validate something with a repeatable test is critical to truly understanding that something. Anything else is just speculation.
